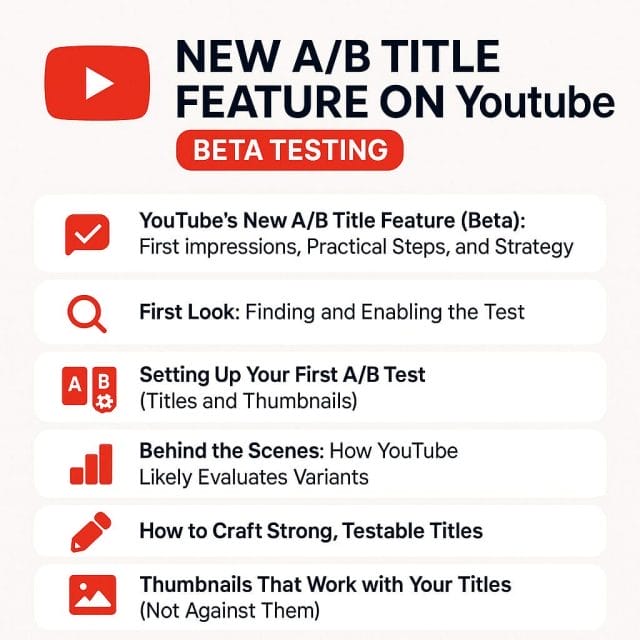

Every now and then YouTube ships something that quietly reshapes how creators test, iterate, and grow. The newly surfaced A/B testing option for titles (with the ability to test thumbnails as well) is one of those features. It’s rolling out as an early access experiment in YouTube Studio, and I’ve just had my first go with it. In this post I’ll walk you through my initial experience, the steps to set up your first test, and the strategy behind running intelligent experiments that actually improve click-through rate (CTR), watch time, and overall viewer satisfaction.

Importantly, I’m not simply recapping what’s in the transcript. I’m expanding on each step to give you practical context, extra tips, and the “why” behind each decision. If you already have access, this will help you make the most of it. If you don’t, you’ll know exactly what to do when it appears in your Studio.

Why A/B Testing Titles and Thumbnails Matters

Thumbnails and titles are the front doors to your content. They shape whether someone decides to click, and in turn, they influence whether your video even gets the chance to prove its value. Historically, creators had to manually swap titles and thumbnails, wait, eyeball analytics, and hope to draw a reliable conclusion. Now YouTube is formalising experimentation within Studio—rotating variants for you and reporting back on which one performs better.

That matters because:

- It reduces guesswork. Instead of “I think this might work,” you’ll get a statistically guided result.

- It speeds up iteration. You can learn in days what used to take weeks of manual testing.

- It compounds learning. As you collect winning patterns (words, structures, colours, compositions), your creative instinct improves.

Think of this feature as a learning engine. Your goal isn’t just to “win” one test; it’s to build your personal playbook for predictable creative performance.

First Look: Finding and Enabling the Test

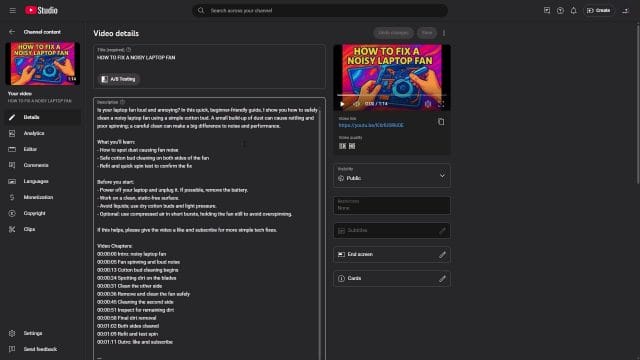

When the option appears in your Studio (it’s still in beta, so not everyone has it yet), you’ll likely see a “Try now” or “Test and compare” button in your video details or thumbnail/title section. This is YouTube’s native environment for creating and managing experiments. You can test one or more variables—most notably titles and thumbnails—and set up to three variants for each.

In my case, this is the first time I’ve used it, and it appeared as a prompt right in the interface. If you’re not seeing it:

- Make sure your YouTube Studio app is updated, or check Studio in a desktop browser, which is usually the best place to spot feature rollouts.

- Check your channel’s eligibility and any region-based limitations.

- Keep an eye on Creator Insider updates and Studio banners—these rollouts often happen in waves.

The key takeaway: if you have early access, jump in and explore. If you don’t, bookmark this guide for when it lands.

Setting Up Your First A/B Test (Titles and Thumbnails)

Once you click “Try now”, YouTube presents the core experiment interface: create up to three different titles, optionally pair them with up to three thumbnails, and decide whether the test focuses on “title only” or “title and thumbnail”.

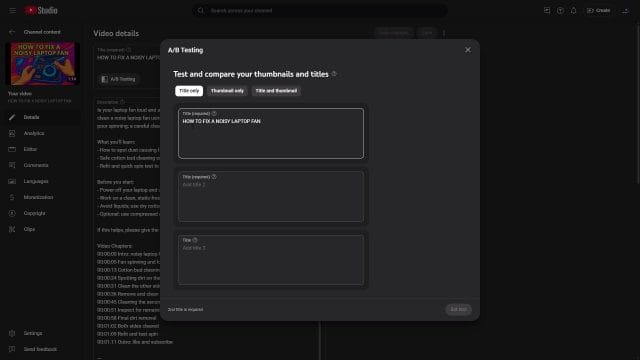

Step 1: Decide What You’re Testing—Title, Thumbnail, or Both

You’ll see that you can test only titles, only thumbnails, or both together. For clean learning, it’s often best to test one variable at a time. That way, when a variant wins, you know what drove the improvement. However, if you’re in a discovery phase or if both your title and thumbnail need a refresh, testing them together can provide a rapid improvement to your CTR. The trade-off is that it’s harder to disentangle which element did the heavy lifting.

Step 2: Add Your Title Variants

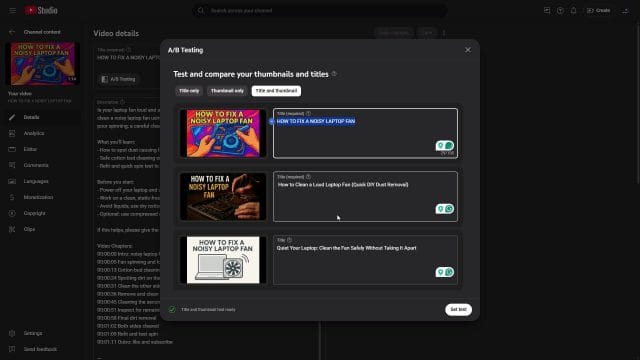

You can add three different titles. I used AI to generate multiple options quickly—I’ve actually built my own tool to generate titles and images and push them directly to YouTube. I’ll share more about that workflow in a future tutorial, but regardless of the tool, here’s how to think about variant creation:

- Make each title intentionally different. Vary structure, promise, specificity, numbers, and emotional pull.

- Stay honest. The title should set a true expectation that the content fulfils.

- Mind character count. YouTube truncates long titles on mobile, so keep the most important words up front.

- Test distinct angles. For example: “benefit-first”, “curiosity-lead”, and “problem-solution”.

At this stage, I suggest drafting at least 8–10 ideas, then shortlisting the strongest three for the test. AI can speed up this ideation, but human judgement—informed by your audience—is crucial.

Step 3: Add Thumbnail Variants (If Testing Them)

I also added two more thumbnails using my tool. Note that the title content may not match each thumbnail’s on-image text perfectly when you generate at speed. That’s okay for a rough first pass, but for future tests, aim for tighter alignment between title and thumbnail so they reinforce the same idea rather than competing. A mismatch can sometimes work if it sparks curiosity—but do it deliberately, not accidentally.

In the interface you’ll see toggles or selectors for “Title only” or “Title and thumbnail”. I initially leaned towards “Title and thumbnail” because I wanted to test both in one go and see what the system did with my inputs. Over time, I’ll likely run more focused tests so I can build a clearer playbook of what specifically moves the needle for my audience.

Step 4: Finalise and Start the Test

After selecting your variants, you start the test. The platform saves it and begins rotating variants to your audience. You won’t see instant results; the system needs traffic before it can provide meaningful insights. Patience is part of the process: rushing to judgement can lead to false positives or conclusions based on too little data.

This raises a key point: what happens after you press save? YouTube distributes impressions across variants, monitors metrics (most notably CTR and viewer retention), and eventually indicates a winner. In some cases, you might see a “no significant difference” result—which is still a useful outcome. It tells you the variations you tested didn’t materially change behaviour, so you’ll need to try more divergent options.

Step 5: Where to See Results

At first, there are no stats, especially if the video is new or traffic is light. You’ll need to give it time so YouTube can accumulate enough impressions and engagement data to call a winner. Expect to see an option within the experiment panel to view more details once data accumulates, including how each variant performed. If it’s not visible right away, that may be because the feature is in beta, and data might take longer or be presented conservatively as the system calibrates.

Behind the Scenes: How YouTube Likely Evaluates Variants

While YouTube doesn’t publish every detail of its evaluation methodology, we can infer best practices from experimentation science and existing Studio analytics:

- CTR is a leading indicator. If a title/thumbnail combo gets more people to click with the same audience exposure, it’s a strong signal.

- Watch time and audience retention temper CTR. A misleading title might inflate CTR but hurt retention—YouTube should (and likely does) penalise that outcome in deciding the “winner”.

- Viewer satisfaction signals may be incorporated. Likes, comments, shares, and to some extent, session-level behaviour, all help identify whether clicks translate into meaningful engagement.

- Statistical confidence determines a result. Variants need sufficient impressions to observe a reliable difference. Patience helps avoid premature decisions.

Practically, that means your winning variant should earn its victory across both the “promise” phase (the click) and the “delivery” phase (watch time and satisfaction).
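
To make the "statistical confidence" point concrete, here is a minimal sketch of a textbook two-proportion z-test on CTR. It is not YouTube's method (that isn't published); the function name and the click and impression figures are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def ctr_z_test(clicks_a: int, impressions_a: int,
               clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the CTR difference between variants A and B."""
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (ctr_b - ctr_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up numbers: 4.0% CTR for A versus 4.6% CTR for B.
z, p = ctr_z_test(clicks_a=400, impressions_a=10_000,
                  clicks_b=460, impressions_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below 0.05 corresponds to the common "95% confidence" rule of thumb. It only covers the click, so retention still needs a separate look.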

How to Craft Strong, Testable Titles

Whether you’re using AI, brainstorming manually, or mixing both, focus on variety and clarity. Here are frameworks and prompts that consistently yield strong test candidates:

Frameworks to Try

- Benefit-first: “Get [Desired Result] in [Timeframe] Without [Pain Point]”

- Outcome-plus-curiosity: “I Tried [Strategy] for 30 Days—Here’s What Happened”

- Numbered or quantified: “7 Proven Ways to [Outcome]”

- Problem-solution: “Fix [Specific Problem] in 10 Minutes (No Tools Needed)”

- Myth-busting: “[Common Belief] Is Wrong—Do This Instead”

- Shortcut or framework: “The 3-Step [Topic] System That Actually Works”

Writing Rules of Thumb

- Front-load value. Put the hook or keyword early to avoid truncation.

- Avoid vague language. “Amazing Tips!” is far less compelling than “Save £300/Month with These 5 Energy Habits”.

- Use specificity. Numbers, timeframes, and concrete outcomes win clicks—and trust.

- Keep it honest. If the content doesn’t deliver the promised outcome, viewers bounce, and future recommendations suffer.

- Minimise filler characters. Excessive punctuation or emojis can read as spammy—use sparingly and purposefully.

Pair these rules with AI ideation to quickly draft alternatives, but always edit for your voice and your audience’s expectations.
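
If you draft a lot of titles, a few of these rules can be checked automatically. The sketch below is a hypothetical helper of my own, not anything built into YouTube: the 60-character limit is an assumption based on the length guidance in this post, and the "first half" keyword rule is an arbitrary cut-off.

```python
import re

MAX_VISIBLE_CHARS = 60  # assumption: upper end of the 45-60 character guidance in this post


def lint_title(title: str, keyword: str) -> list[str]:
    """Return a list of warnings for a draft title (an empty list means no warnings)."""
    warnings = []
    if len(title) > MAX_VISIBLE_CHARS:
        warnings.append(f"{len(title)} characters; may be truncated on mobile")
    position = title.lower().find(keyword.lower())
    if position == -1:
        warnings.append(f"keyword '{keyword}' is missing")
    elif position > MAX_VISIBLE_CHARS // 2:
        warnings.append(f"keyword '{keyword}' starts at character {position}; move it earlier")
    # Three or more consecutive ! or ? characters is treated as filler punctuation.
    if re.search(r"[!?]{3,}", title):
        warnings.append("excessive punctuation")
    return warnings


drafts = [
    "Save £300/Month with These 5 Energy Habits",
    "Amazing Tips!!! You Really Will Not Believe How Much Money These Energy Habits Save",
]
for draft in drafts:
    print(draft, "->", lint_title(draft, "energy habits") or "ok")
```

It won't judge whether a title is compelling or honest; it only catches the mechanical problems before a human edit.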

Thumbnails That Work with Your Titles (Not Against Them)

Because this new feature lets you test both titles and thumbnails, it’s tempting to change everything at once. If you do, be deliberate: your title and thumbnail should be complementary, not redundant.

- Division of labour. Let the title carry the “promise” and the thumbnail carry the “proof” or “emotion”.

- Readable text. If you add text to the thumbnail, keep it short, large, and high-contrast.

- Face and gaze. Faces with clear emotions can boost curiosity; gaze direction can guide attention to key elements.

- Colour contrast. Use colour to stand out in the feed but stay on-brand for recognisability.

- Consistency across variants. When testing titles only, keep the thumbnail constant. When testing thumbnails, keep the title constant. When testing both, make sure pairs are logical.

In my first pass, my auto-generated thumbnails didn’t perfectly match each title’s phrasing. That’s not ideal for learning, but it’s instructive: even minor misalignments create micro-friction at the moment of the click. In future tests, I’ll either keep one variable static or craft tightly paired title–thumbnail sets to isolate what’s working.

Using AI to Generate Ideas—Responsibly

AI is brilliant for breaking creative blocks and exploring angles you might not consider. I used an in-house tool to generate both titles and thumbnails, and even push variants directly to YouTube. If you’re DIY-ing without a custom tool, you can still get great results with thoughtful prompts:

- Prompt for frameworks: “Give me 10 titles for [topic] using benefit-first, curiosity, and listicle styles.”

- Constrain the length: “Keep each title under 60 characters and front-load the hook.”

- Force differentiation: “Make each title structurally distinct, not minor word swaps.”

- Target audience: “Write titles that appeal to [audience persona], who care about [outcomes] and dislike [pain points].”

Then, filter with your brand voice, ethical standards, and actual content. AI can overshoot into clickbait—your job is to pull it back to truthful, high-signal language that primes viewers for what they’ll actually see.
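
One way to check that AI-generated titles are genuinely different, rather than minor word swaps, is a rough similarity score. This sketch uses Python's standard `difflib`; the function name and the 0.8 threshold are my own assumptions, and the example titles are invented.

```python
from difflib import SequenceMatcher
from itertools import combinations


def too_similar(titles: list[str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return pairs of titles whose character-level similarity meets the threshold."""
    flagged = []
    for first, second in combinations(titles, 2):
        ratio = SequenceMatcher(None, first.lower(), second.lower()).ratio()
        if ratio >= threshold:
            flagged.append((first, second, round(ratio, 2)))
    return flagged


candidates = [
    "7 Proven Ways to Grow Your Channel",
    "7 Proven Ways to Grow a Channel",
    "I Tried Daily Uploads for 30 Days",
]
for first, second, ratio in too_similar(candidates):
    print(f"Too close ({ratio}): {first!r} vs {second!r}")
```

Character-level similarity is a blunt instrument: two titles can share few characters and still make the same promise, so treat it as a first filter only.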

Interpreting Results: What “Winning” Really Means

When the data starts to populate, don’t fixate only on CTR. You want a variant that improves overall viewer experience and channel performance. Here’s how to read outcomes holistically:

- If CTR is up and retention is steady or improved, fantastic—clear winner.

- If CTR is up but retention drops, consider whether the title overpromises. You might be attracting the wrong clicks.

- If CTR is flat but retention improves, you may have a title that better qualifies the right audience. That can be a strategic win.

- If the platform can’t call a winner, your variants might be too similar. Test more divergent options next.
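
The reading guide above can be written down as a simple decision rule. This is only a sketch of my own rule of thumb, not how YouTube picks a winner; the function name and the 2% tolerance for treating a change as "flat" are assumptions.

```python
def interpret(ctr_change: float, retention_change: float, tolerance: float = 0.02) -> str:
    """Classify a variant from its relative change versus the baseline.

    Changes are fractions (0.10 means +10%). `tolerance` is an assumed
    threshold below which a change is treated as flat.
    """
    ctr_up = ctr_change > tolerance
    retention_down = retention_change < -tolerance
    retention_up = retention_change > tolerance
    if ctr_up and not retention_down:
        return "clear winner"
    if ctr_up and retention_down:
        return "possible overpromise: check the title against the content"
    if abs(ctr_change) <= tolerance and retention_up:
        return "strategic win: better-qualified audience"
    return "no clear winner: test more divergent variants"


print(interpret(ctr_change=0.12, retention_change=0.01))
print(interpret(ctr_change=0.15, retention_change=-0.08))
print(interpret(ctr_change=0.00, retention_change=0.06))
```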

Also, consider timeframe and seasonality. Traffic can fluctuate by day of week, hour, and event cycles. Avoid calling results too early; let the experiment reach a meaningful sample size. If your niche has a spiky audience (e.g., educational content that peaks on Sundays), let the test span multiple typical cycles.

Best Practices and Testing Playbook

Before You Start

- Define your hypothesis. “A benefit-first title will increase CTR by 10% without hurting retention.”

- Choose your variable. Start with title-only or thumbnail-only to learn faster.

- Plan for three strong variants. Avoid micro-variations (e.g., swapping two words).

- Check your analytics baseline. Know your typical CTR and retention so you can spot meaningful shifts.
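
A hypothesis like "increase CTR by 10%" also tells you roughly how much traffic the test needs. The sketch below uses the standard sample-size approximation for comparing two proportions; it is not YouTube's calculation, and the 80% power default and the 5% baseline CTR are assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def impressions_per_variant(baseline_ctr: float, relative_lift: float,
                            confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate impressions needed per variant to detect the lift (two-sided test)."""
    p1 = baseline_ctr
    p2 = baseline_ctr * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed baseline: 5% CTR, looking for a 10% relative lift (5.0% -> 5.5%).
print(impressions_per_variant(baseline_ctr=0.05, relative_lift=0.10))
```

With these inputs the answer lands in the tens of thousands of impressions per variant, which is why small channels need more than a day or two. Bigger differences between variants need far less traffic to detect.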

During the Test

- Don’t change other variables. Resist editing descriptions, tags, or end screens mid-test if you can avoid it.

- Give it time. Let the system gather enough data; a day or two might be too short for small channels.

- Watch different surfaces. Results can vary by traffic source (Home, Suggested, Search). Keep that in mind.

- Log your tests. Track variants, dates, and results in a simple spreadsheet to build your own library of learnings.
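
If you'd rather keep that log as a CSV file than a hand-edited spreadsheet, a minimal sketch could look like this. The column names, the video ID, and the example values are all hypothetical; adapt them to whatever you actually track.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass, fields
from pathlib import Path


@dataclass
class ExperimentRecord:
    video_id: str
    start_date: str
    end_date: str
    variable: str      # "title", "thumbnail", or "both"
    variant: str
    hypothesis: str
    result: str


def log_test(path: Path, record: ExperimentRecord) -> None:
    """Append one record to the CSV log, writing the header if the file is new."""
    is_new = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(ExperimentRecord)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(record))


# Demo in a temporary folder; swap in a permanent path for a real log.
with tempfile.TemporaryDirectory() as folder:
    log_path = Path(folder) / "title_tests.csv"
    log_test(log_path, ExperimentRecord(
        video_id="example-video", start_date="2024-01-01", end_date="2024-01-08",
        variable="title", variant="B: benefit-first",
        hypothesis="Benefit-first title lifts CTR by 10%", result="pending",
    ))
    print(log_path.read_text(encoding="utf-8"))
```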

After the Test

- Adopt the winner. Update the video with the winning title/thumbnail permanently.

- Reflect on why. What language, structure, or imagery worked? Add those patterns to your playbook.

- Plan the next test. Use what you learned to design the next, more refined experiment.

Common Pitfalls (And How to Avoid Them)

- Clickbait temptation. It may bump CTR for a moment but usually harms retention and trust. Long term, the algorithm weighs satisfaction.

- Testing too many things at once. If title, thumbnail, and timing all change, you can’t attribute the result.

- Calling winners too early. Early data is noisy. Wait for adequate impressions and a consistent trend.

- Ignoring audience segments. A title that attracts broadly may not attract the right people for your niche. Balance scale with fit.

- Inconsistent branding. Wildly different visual styles might hurt recognition. Iterate within a coherent brand system.

Optimisation Tips Specifically for Titles

- Front-load the hook: “Stop Wasting Money on…” rather than “Here’s Why You Should Stop…”

- Use active language: “Build”, “Fix”, “Save”, “Master”, “Win”.

- Clarity over cleverness: A clear promise beats a pun nine times out of ten.

- Be audience-aware: Mirror the words your viewers use in comments and search queries.

- Leverage contrast: Juxtapose expectation and outcome—“I Spent £0 on Ads and Got 100K Views”.

What If You Don’t Have the Feature Yet?

Because this is a beta, many creators won’t see it immediately. Until it rolls out more broadly, you can simulate the spirit of A/B testing:

- Manual rotations: Change the title for a defined period (e.g., 48–72 hours), log metrics, then rotate to another variant.

- Community tests: Post two thumbnail mock-ups and poll your audience. Not definitive, but useful directional feedback.

- Search-based insights: Use YouTube’s research tools and Google Trends to see the language your audience prefers.

- Pilot on Shorts: Test hooks and phrasing in Shorts titles to gauge interest, then port the learnings to long-form.

These methods aren’t as precise as YouTube’s built-in experiments, but they keep your optimisation muscles working until you get access.
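
For manual rotations, it helps to fix the schedule before you start so every variant gets the same amount of time. Here is a small sketch; the function name, start date, and variant labels are hypothetical, and the 72-hour default comes from the upper end of the range suggested above.

```python
from datetime import datetime, timedelta


def rotation_schedule(variants: list[str], start: datetime,
                      hours_each: int = 72) -> list[tuple[str, datetime, datetime]]:
    """Return (variant, start, end) tuples for back-to-back manual rotations."""
    schedule = []
    for index, variant in enumerate(variants):
        begins = start + timedelta(hours=hours_each * index)
        schedule.append((variant, begins, begins + timedelta(hours=hours_each)))
    return schedule


for variant, begins, ends in rotation_schedule(
    ["Title A", "Title B", "Title C"], start=datetime(2024, 1, 1, 9, 0)
):
    print(f"{variant}: {begins:%a %d %b %H:%M} to {ends:%a %d %b %H:%M}")
```

Because the variants run one after another, each sees different days of the week. If your traffic is spiky, a full week per variant (`hours_each=168`) makes the comparison fairer.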

My First Run: What I Did and What I’ll Watch For

Here’s the recap of my initial setup, with added context:

- I clicked into the new “A/B testing” prompt (early access) and chose to test titles and thumbnails together. This is great for a first look but a bit noisy for learning. In future, I’ll isolate variables more often.

- I generated three titles and extra thumbnails using my own AI-powered tool. It can even upload variants directly to YouTube, which speeds up iteration. I’ll share a deeper dive on the tool in a separate post/video.

- Some titles and thumbnails weren’t perfectly matched due to rapid generation. I’ll tighten alignment in upcoming tests to increase clarity and reduce mixed signals.

- I set the test and saved it. Now the waiting begins: first for impressions, then for a statistically meaningful outcome. I’m expecting CTR to move first, followed by retention patterns that confirm or temper the “win”.

Once the results populate, I’ll be looking for:

- CTR lift without retention drop. That’s the gold standard.

- Audience source differences. If Home favours one title but Search favours another, that tells me something about intent.

- Geography and device differences. Mobile truncation can change which title wins.

Based on the outcome, I’ll either lock in the winner and move on to the next video, or I’ll test a second round with refined variants that lean into what worked (e.g., more specificity, a stronger action verb, or a clearer numeric promise).

Ethical Click Optimisation: Growth Without the Gimmicks

Optimising titles and thumbnails can slide into clickbait if you’re not careful. Resist. The platform is good at detecting misaligned promises, and your audience is even better. A good rule: would the viewer feel pleasantly surprised by how much the video delivers relative to the title? Aim to slightly under-promise and over-deliver. Trust builds channels; gimmicks build churn.

A Checklist You Can Use for Every Test

- Goal set? (e.g., “Increase CTR by 10% on Home surface.”)

- Single variable chosen? (Title only or thumbnail only?)

- Three distinct variants drafted?

- Title–thumbnail alignment verified?

- Main keyword near the start of the title?

- Most important words within the first 45–60 characters?

- Strong emotional or specific hook present?

- Hypothesis recorded in your log?

- Test duration planned (at least one typical traffic cycle)?

- Decision rule set (e.g., “Adopt winner after 95% confidence or after X impressions if clear trend”)?

Final Thoughts: Beta Today, Baseline Tomorrow

This A/B testing capability for titles (and thumbnails) is a big step towards evidence-based creative on YouTube. It doesn’t replace intuition; it trains it. If you’ve got early access, try it now—even a simple test can reveal helpful patterns. If you haven’t got it yet, use manual methods in the meantime and get your ideation systems in place so you can move fast when it rolls out.

I’ll keep experimenting and report back with learnings, including a walkthrough of the AI tool I’m using to generate and upload variants. In the meantime, if you’ve got access, I’d love to hear how your tests are going—drop your experiences and results in the comments. Let’s build a shared playbook for titles and thumbnails that earn the click honestly and keep people watching.

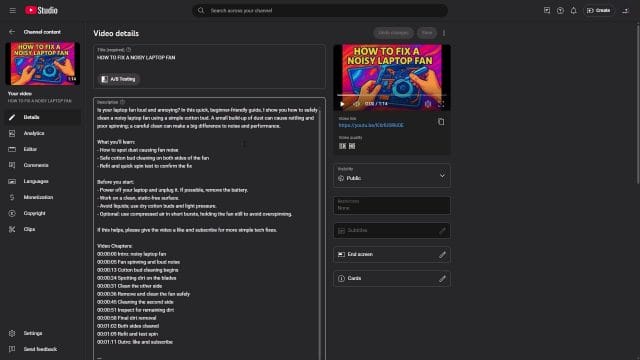

Above: The feature appeared in my Studio with a prompt, since I’m in the early access group. If you don’t see this yet, sit tight—it’s rolling out gradually.

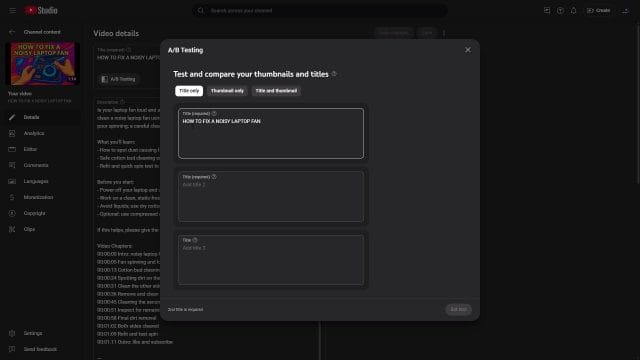

Above: The experiment interface indicates you can compare titles and thumbnails. You can add up to three variants for each and select whether you’re testing one element or both together.

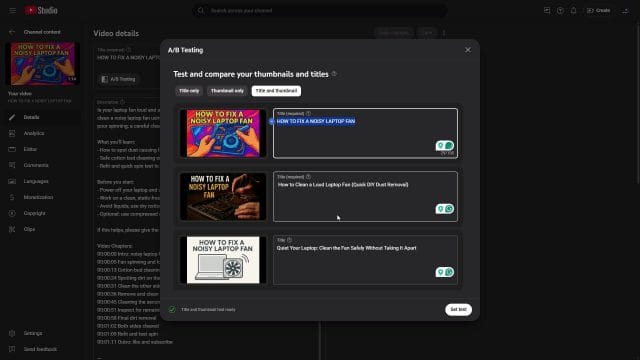

Above: I opted to test both titles and thumbnails for the first run. Great for quick wins, though isolating variables is better for clear learnings.

Above: Thumbnails were generated programmatically. In future tests, I’ll ensure tighter title–thumbnail alignment for cleaner results.

Above: After saving, the test runs automatically. Analytics will display results when there’s enough data to be meaningful. Remember: it’s beta, so interfaces and metrics may shift as YouTube refines the experience.

